The introduction to the AI Act

This year marked a significant advancement in the regulation of AI-powered devices within the European market with the introduction of the AI Act. As the first comprehensive regulation on artificial intelligence, the AI Act has established a benchmark for future policies, shaping the direction of AI governance on a global scale. The Act applies to a wide range of AI technologies across various industries, classifying products based on their assessed risk of causing harm [1]. These categories are Unacceptable, High, Limited, and Minimal Risk. Each category comes with specific compliance frameworks, ensuring that products meet the necessary safety and ethical standards. Notably, the Act prohibits all devices classified as posing an Unacceptable Risk.

The AI Act was first proposed by the European Commission on April 21, 2021 [2]. After nearly three years of deliberation, it was passed by the European Parliament on March 13, 2024, and subsequently received unanimous approval from the EU Council on May 21, 2024. The Act entered into force on August 1, 2024. From that date, companies are encouraged to comply voluntarily while the Act's obligations phase in under strict deadlines. Most provisions become applicable on August 2, 2026.

AI in pathology

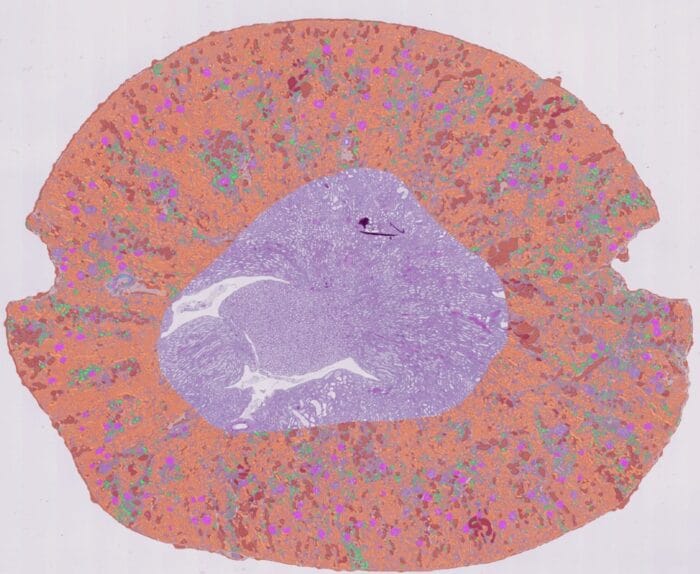

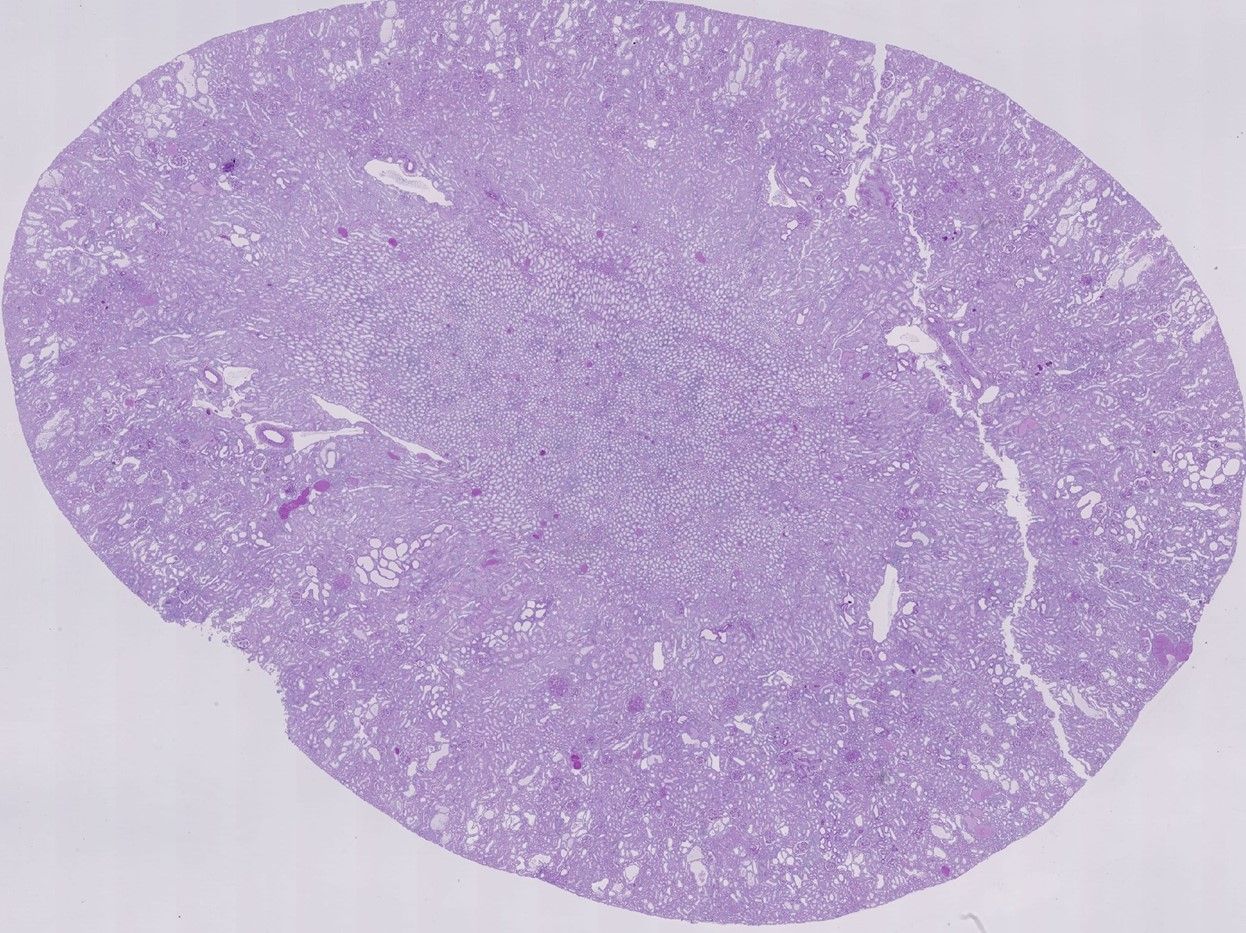

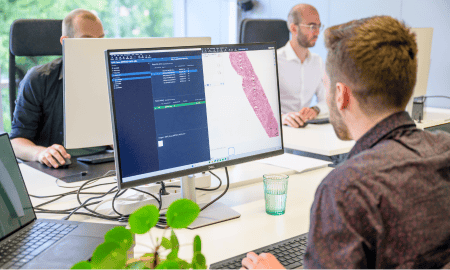

Pathology has undergone remarkable changes driven by technological advancements. The development of brightfield and fluorescent slide scanners has fundamentally transformed the field by allowing the complete virtualization and digitalization of glass slides [3]. This shift has given rise to digital pathology, which modernizes and refines traditional methodologies. Digital pathology now involves converting histopathology, immunohistochemistry, and cytology slides into digital formats using whole-slide scanners. It also encompasses the subsequent interpretation, management, and analysis of these digital images through sophisticated computational techniques.

This approach offers numerous advantages, including the ability to work remotely, improved storage through cloud platforms, and automated review of slides by algorithms [3]. These advancements reduce the time and human effort required for analysis. Many of the solutions currently available on the market use machine learning to automate and optimize the pathology workflow, aiming to improve consistency, efficiency, and ease of assessment.

The implications of the AI Act on digital pathology solutions

As digital pathology evolves to integrate AI algorithms, machine learning technologies, and smart solutions, the AI Act is poised to have a significant impact on the field.

The classification of an AI system under the AI Act depends on its intended use, so similar technologies can fall into different risk categories. AI-powered medical devices and in vitro diagnostic devices placed on the European Union market under the Medical Device Regulation (MDR) or the In Vitro Diagnostic Regulation (IVDR) are classified as high-risk when those regulations require a third-party conformity assessment [4]. Such devices must demonstrate compliance with the safety and performance standards set by the legislation. As a result, AI-powered devices in healthcare will be held to higher standards of transparency. Developers and companies will be required to provide clear documentation detailing the training, validation, and development processes of their algorithms.

A central development in AI systems is the growing emphasis on transparency and explainability. In healthcare, it is crucial that AI systems are understandable to clinicians, patients, and other stakeholders. AI has a reputation as a ‘black box’ whose decision-making process is often inexplicable, so the goal is to develop AI systems that are not only highly effective but also transparent in how they reach their conclusions. This clarity is vital in giving healthcare professionals the confidence to rely on AI in critical diagnostic contexts. The AI Act plays a pivotal role in this evolution by mandating continuous monitoring and rigorous assessments to ensure compliance with its guidelines [5].

The AI Act also strengthens the focus on patient safety and data integrity, two of the most critical considerations in healthcare AI [1]. Given the sensitive nature of patient data, there is a strong need for a standardized and regulated framework to maintain high standards of data protection. The AI Act addresses this need by establishing clear guidelines and requirements for the safety and security of data used in AI systems, thereby fostering greater trust in these technologies.

Finally, the AI Act advocates for a more responsible and ethical approach to AI deployment. A key principle of this legislation is upholding the European Union’s fundamental rights [6], with the aim of reducing discrimination and inequalities across various sectors, including healthcare. By mandating increased transparency and additional assessments, the AI Act seeks to keep biased data out of algorithm training, so that AI models do not unfairly discriminate against certain groups of patients.

Overall, the AI Act establishes a clear and standardized framework for the development of AI systems. It represents a significant advancement towards more transparent and secure use of algorithms in healthcare, ensuring that all developers comply with both ethical and technical requirements.

The timeline of the AI Act legislation

Sources:

- [1] https://artificialintelligenceact.eu/high-level-summary/

- [2] https://www.whitecase.com/insight-alert/long-awaited-eu-ai-act-becomes-law-after-publication-eus-official-journal

- [3] https://www.sciencedirect.com/science/article/pii/S0023683722006468

- [4] Artificial Intelligence and Medical Devices – Compliance with the AI Act | Fieldfisher

- [5] Transparency requirements under the EU AI Act and the GDPR: how will they co-exist? | Fieldfisher

- [6] What are fundamental rights? | European Union Agency for Fundamental Rights (europa.eu)
